funny dev dump

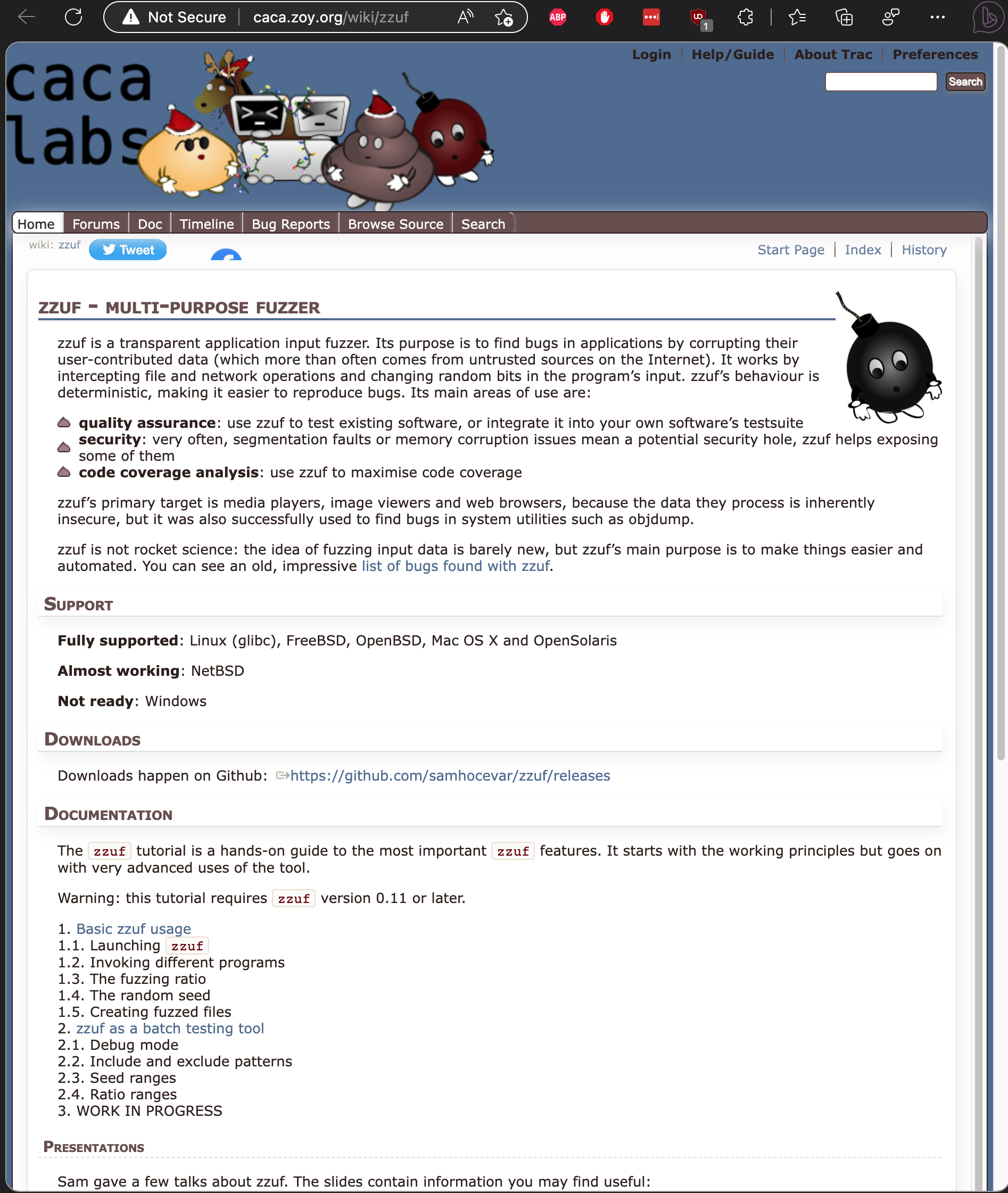

# caca labs

Was browsing random formulae on my lil project breview, a Homebrew formula visualizer, and clicked on a random one, which led me to this:

# npm install verizon

A common joke template for my coworkers and me is to say "npm install some-non-tech-word", e.g. `npm install gertrude`, `npm install doritos`, `npm install verizon`. And literally every time I've searched one afterwards, it's a real package. Try it. This one was obvs made to build backlinks and boost SEO for some scam. Wonder how quickly npm can deal with that? It's been up for 4 days lol.

I hadn't realized I had a separate item here called /node_modules/death, a package I came across while browsing:

here's a test from it:

```javascript
// the irony of spawn
var spawn = require('win-spawn')

describe('death', function() {
  describe('default behavior', function() {
    it('should catch SIGINT, SIGTERM, and SIGQUIT and return 3', function(done) {
      // ...
    })
  })
})
```

Aha, it's testing for the death of a process... aren't humans just processes of God? jk... unless?

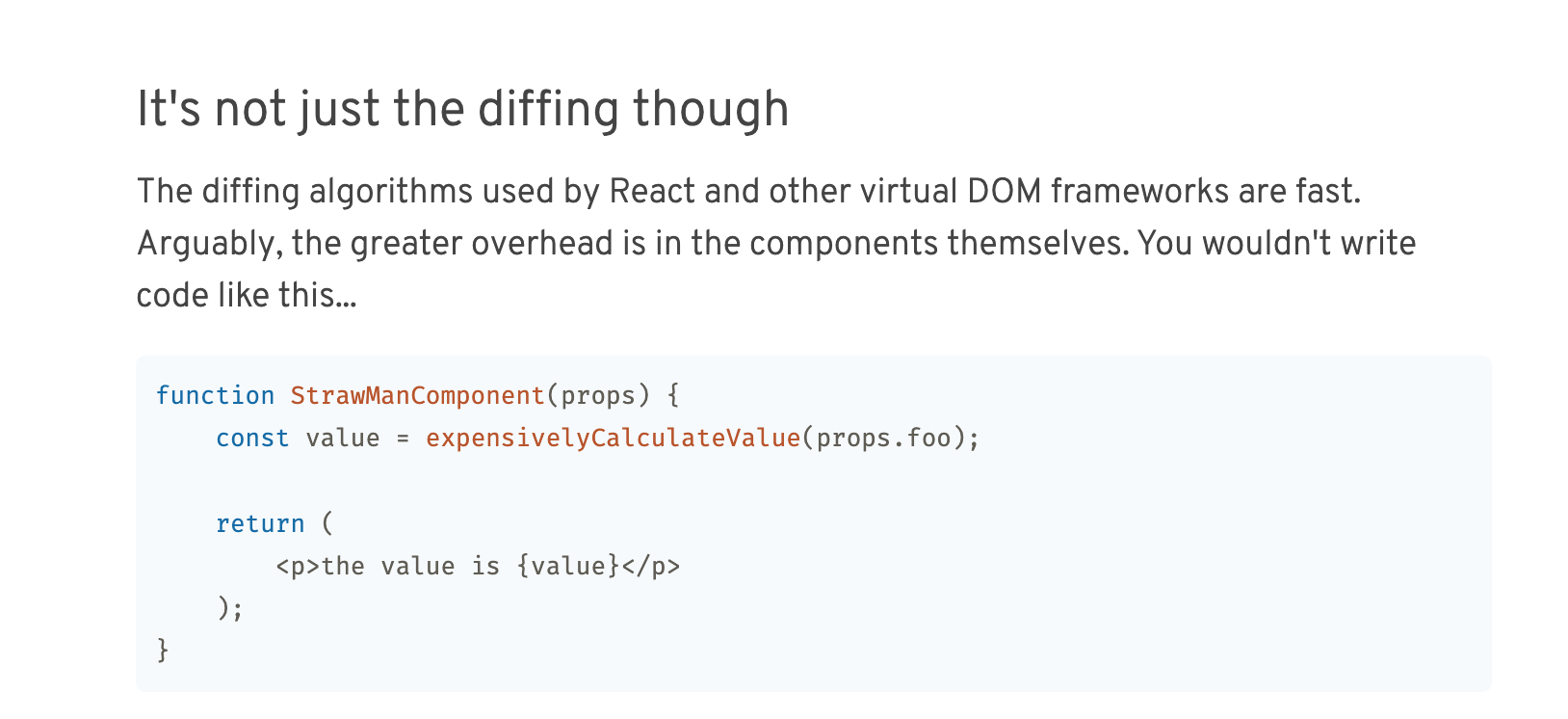
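For the curious: catching those three signals in plain Node.js looks roughly like this. It's a minimal sketch built on Node's built-in `process.on`, not the `death` package's actual API or implementation, and the `onDeath` helper name is made up for illustration.

```javascript
// Minimal sketch: run a callback when the process receives a "please die" signal.
// `onDeath` is a hypothetical helper built on Node's process signal events,
// NOT the API of the `death` npm package.
const SIGNALS = ['SIGINT', 'SIGTERM', 'SIGQUIT'];

function onDeath(callback) {
  // Attach one listener per signal and remember it so it can be removed later.
  const handlers = SIGNALS.map((signal) => {
    const handler = () => callback(signal);
    process.on(signal, handler);
    return [signal, handler];
  });

  // Return an "undo" function that removes every listener we added.
  return function off() {
    for (const [signal, handler] of handlers) {
      process.removeListener(signal, handler);
    }
  };
}
```

One gotcha: once a listener is attached for `SIGINT` or `SIGTERM`, Node drops its default exit behavior, so a real callback should finish its cleanup and call `process.exit()` itself.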

# svelte strawman

Citing that one React talk where the guy says "the virtual DOM is fast, the DOM is slow" (...), they give a code example showing why virtual DOMs are... slow, and their component name is funny:

# me've

# readability cope

Was browsing the source code of Safari's reader mode and found this comment by a disgruntled Mozilla eng:

```javascript
function Readability(doc, options) {
  // In some older versions, people passed a URI as the first argument. Cope:
  if (options && options.documentElement) {
    doc = options;
    options = arguments[2];
  } else if (!doc || !doc.documentElement) {
    throw new Error("First argument to Readability constructor should be a document object.");
  }
  options = options || {};
  // ...
}
```

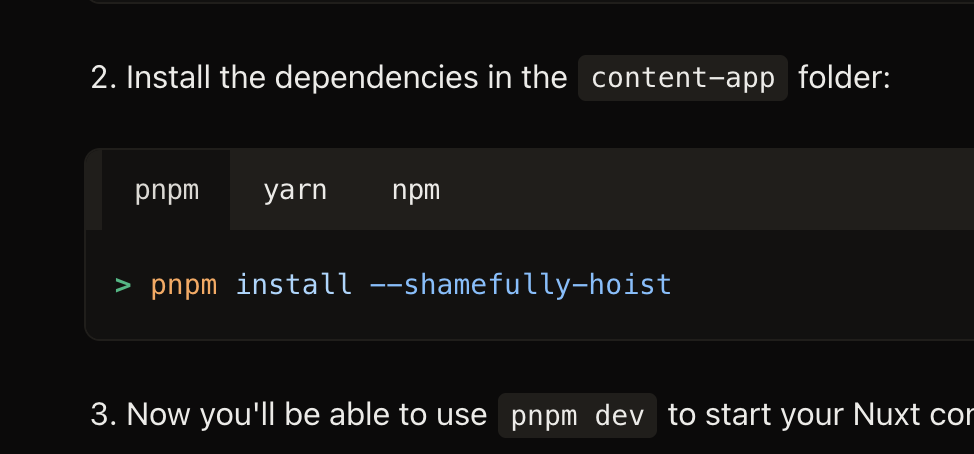
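The "Cope" branch is a backwards-compatibility shim: if the *second* argument is the document, the caller must be using the old `(uri, doc, options)` call style, so every argument shifts left by one. Below is a rough sketch of the same check pulled into a standalone helper. `parseArticle` and `normalizeArgs` are hypothetical names, and a "document" is just any object with a truthy `documentElement`, mirroring the duck-typing check in the snippet. It is not Readability's real API.

```javascript
// Hypothetical sketch of Readability's legacy-argument shim as a standalone helper.
// A "document" is any object with a truthy `documentElement`.
const isDocument = (x) => Boolean(x && x.documentElement);

// Accepts either the modern (doc, options) or the legacy (uri, doc, options)
// call style and returns a normalized { doc, options } pair.
function normalizeArgs(args) {
  const [first, second, third] = args;
  if (isDocument(second)) {
    // Legacy style: the first argument was a URI, so shift everything left by one.
    return { doc: second, options: third || {} };
  }
  if (isDocument(first)) {
    // Modern style.
    return { doc: first, options: second || {} };
  }
  throw new Error("First argument should be a document object.");
}

// Hypothetical consumer: just returns the arguments it would work with.
function parseArticle(...args) {
  return normalizeArgs(args);
}

// Usage: both call styles resolve to the same document and options.
const exampleDoc = { documentElement: {} }; // fake document for illustration
parseArticle(exampleDoc, { debug: true });                        // modern call
parseArticle("https://example.com", exampleDoc, { debug: true }); // legacy call
```

Pulling the check into one helper keeps the "cope" logic in a single place instead of reassigning parameters mid-constructor.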

# bizarre flag

Maybe this is just some convention I've never heard of, but...

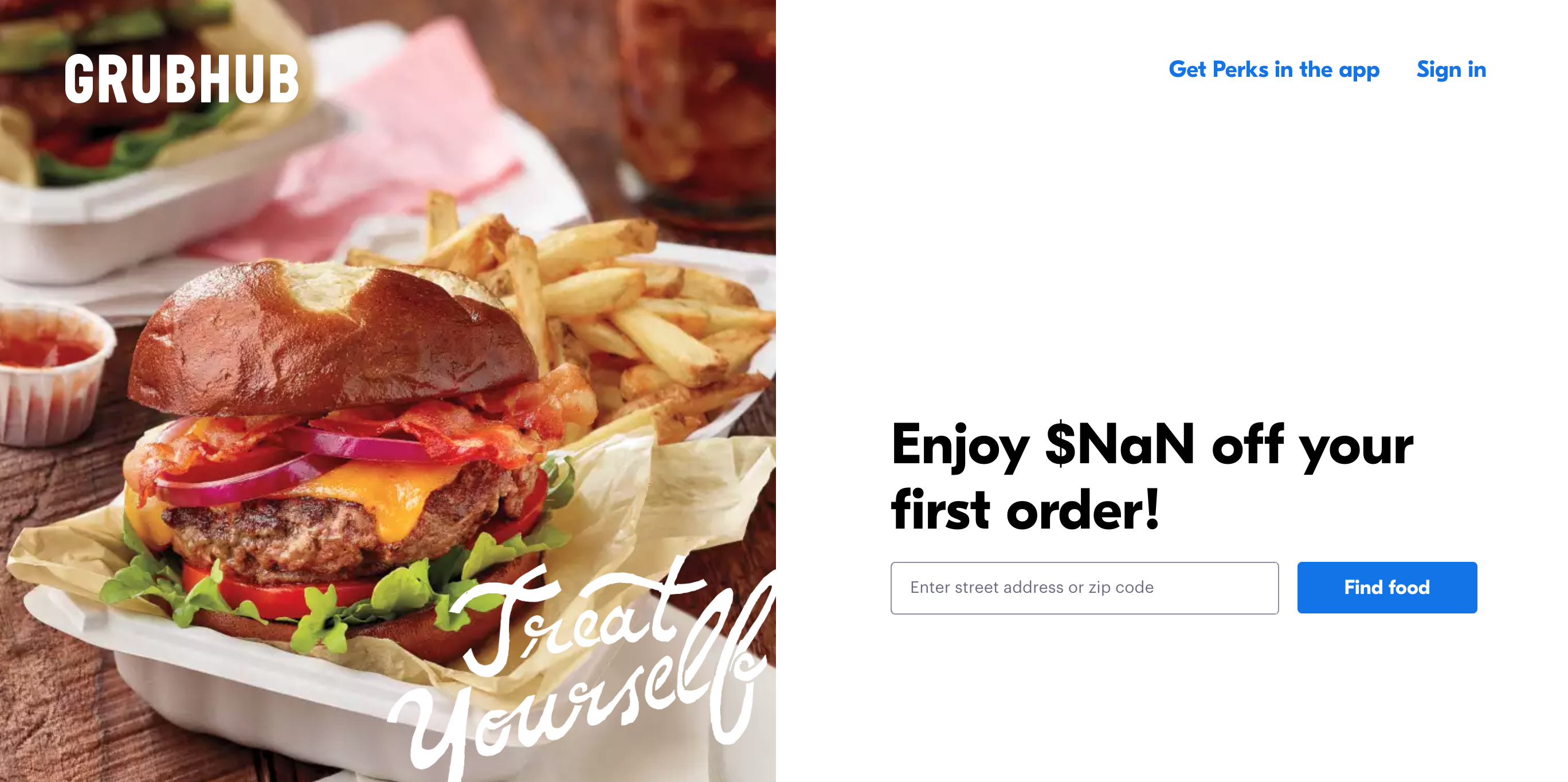

# enjoy NaN

# neural network talk to transformer

some truly dark results for my name, apparently

talk to transformer

> Built by Adam King (@AdamDanielKing) as an easier way to play with OpenAI's new machine learning model. In February, OpenAI unveiled a language model called GPT-2 that generates coherent paragraphs of text one word at a time.
>
> This site runs the full-sized GPT-2 model, called 1558M. Before November 1, OpenAI had only released three smaller, less coherent versions of the model.
>
> While GPT-2 was only trained to predict the next word in a text, it surprisingly learned basic competence in some tasks like translating between languages and answering questions. That's without ever being told that it would be evaluated on those tasks. To learn more, read OpenAI's blog post or follow me on Twitter.

# 1,500 lbs

# asynchronie

# 212

# tcrapc

# amaze pers website

Amazing Kmart-style photoshoot on a personal portfolio website, where it's unclear if it's /s or not: engelschall.com

# outta bounds

# killall children

# classique

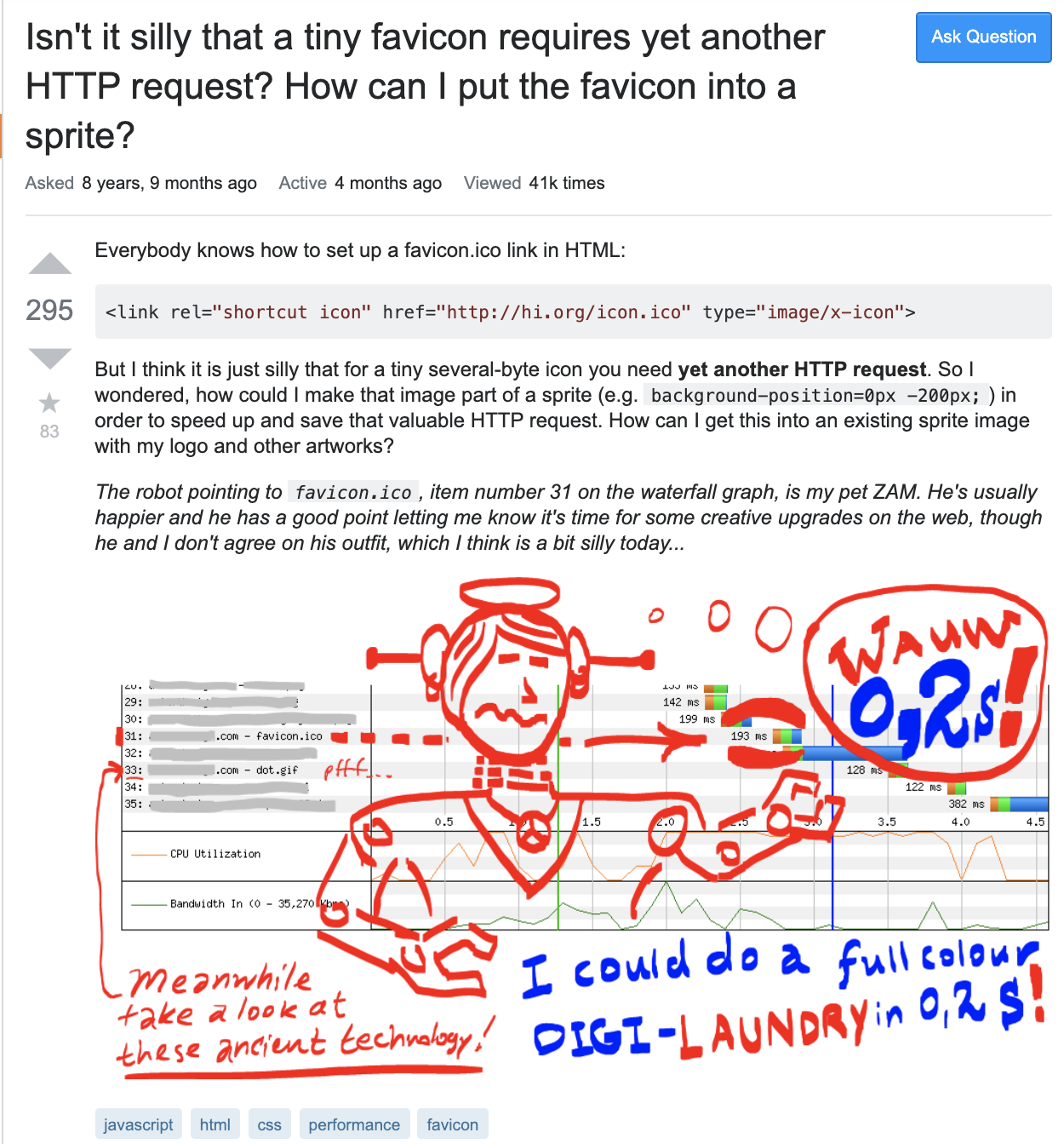

# someone's mad bout favicons

# CMSsSsSsS

Was looking at different CMSs, and Prismic had this review from a client: